A Quickstart Guide to Semantic SEO with JSON-LD and Schema Markup

“Documents can’t really be merged and integrated and queried. But data are protean, able to shift into whatever shape best suits your needs.”

Aaron Swartz

Preface

While discussions about Semantic SEO are easy to come by online and at search conferences, I couldn’t find a single resource that gave me enough of a foothold to get started. This document is my attempt to create the comprehensive training guide I wish I’d found when I started.

Semantic SEO works by linking and publishing metadata and making it available to external users – people, web crawlers, and graph-based knowledge repositories, also known as knowledge graphs. Linked data publishing allows for machine learning algorithms to execute semantic queries and generate SERP features, which in turn supports traffic acquisition.

I will unpack the notion of entity-oriented search and will provide you with schema markup and JSON tutorials which will allow you to:

- Write JSON-LD, deploy schema markup, and build semantic nodes.

- Create entities and list them in the knowledge graphs.

- Map out an entity-oriented and a SERP-based strategy.

- Optimize content with natural language processing (NLP) tools.

If you don’t have the time to learn how to write JSON-LD you can jump to the simple method and generate the code with online tools. For better context, I would still recommend reading the Introduction.

This guide is a work in progress, so if you identify inaccuracies, omissions, oversights, or any other mistakes, please contact me at ivannonveiller@gmail.com and I will make sure to correct them.

My understanding of semantic search has benefited greatly from the synthetic work of Krisztian Balog and my exchange with Dixon Jones who offered a coherent and comprehensive overview of the state of the art.

Table of Contents:

- Preface

- Table of Contents

- Introduction

- Entity-oriented search

- What is an entity?

- Synthetic Queries and Augmentation Queries

- Word2Vec

- Entity Ranking

- Entity Linking

- Populating Knowledge Bases

- Understanding Information Needs

- The Anatomy of an Entity Card

- Synthetic Queries and Augmentation Queries

- How to analyse text with Google’s Natural Language API

- How to use Google Trends for entity research

- How to find entities with the Knowledge Graph Search API

- SERP Optimization for Brands

- Competitor analysis

- Track the Knowledge Graph presence with Authoritas API

- Personal Assistant Search Optimization

- Why JSON-LD

- The syntax of JSON

- Write the JSON-LD code

- Choose a schema type and its properties

- LocalBusiness & Organization

- FAQPage, HowTo & Speakable

- Rating, AggregateRating & Review

- Product & Offer

- Article

- BlogPosting

- Service Schema

- SpeakableSpecification

- CollectionPage

- Person

- VideoObject

- SiteNavigationElement

- SearchAction

- Breadcrumb

- ItemList for Category Pages

- Ecomm marketplace with multiple sellers for single product

- AboutPage

- ContactPage

- JobPosting

- Simple method:

- Deploy the code

- Monitor the business impact

- Case Studies

- Google Patents

- Tools

- Resources

Introduction

You probably started looking into schema markup in order to optimise SERP presence of a company with knowledge cards, rich cards, answer boxes, featured snippets and other rich results such as these:

As an SEO or a digital marketer, I will argue that defining and connecting your webpages with semantic markup is a valuable activity for two reasons:

First, it’s an efficient marketing tactic.

Content discovery and improved SERP placement lead to higher click-through rates, deeper engagement and improved brand awareness. You can take a look at these case studies, including this Schema App study that showed a 160% increase in YoY click volume after the SAP website was marked up.

Additionally, the web is rich with accessible, high-potential opportunities for rich results, especially for long tail keywords. Over 25% of all web queries mention or target specific entities. Only 1/3rd of websites contain defined entities. Only 12.29% of search queries trigger rich results.

Moreover, the growing importance of semantic markup is supported by record global sales of smart speakers and virtual assistants: the number of units sold grew to 147 million in 2019. Hands-free voice command is becoming a significant way for consumers to search (especially in the 26-35 age group), and 40.7% of all voice answers come from rich or featured snippets.

Second, it represents an opportunity to play a transformative role in the semantic web environment. A marked-up web document, originally designed for human consumption, becomes a machine-understandable data set. Schema markup allows you to create nodes that can be mapped and processed by the semantic web and by Google’s Knowledge Graph.

Or, as Aaron Swartz put it:

“Documents can’t really be merged and integrated and queried; they serve mostly as isolated instances to be viewed and reviewed. But data are protean, able to shift into whatever shape best suits your needs.”

In other words it is how documents:

…are plugged into a metadata ecosystem or a Giant Global Graph:

This is the Linked Open Data Cloud, a continuously updated visualization of the web’s connected data. Pink circles represent user generated data, often annotated with schema.org

Semantic Networks & the Semantic Web

A semantic network is a graphic depiction of knowledge that can be organized into a taxonomy. It has been used in mathematics, psychology, and linguistics since Euler’s solution of the Königsberg bridge problem in 1736.

Semantic networks became popular in artificial intelligence and natural language processing in the 1960s.

The Semantic Web is an extension of the existing Web where machine-readable data is layered over the information that is provided for people. The advantage of semantic data is that software can process it.

The history of efforts to enable Web-scale exchange of structured and linked data dates back to the 1990s. A 2001 Scientific American article by Tim Berners-Lee et al. was probably the most ambitious view of this program. He imagined a web of linked data, where semantics, structure, and shared standards would allow humans to communicate with intelligent machines in order to access automated services. Berners-Lee originally expressed his vision of the Semantic Web in 1999 as follows:

“I have a dream for the Web [in which computers] become capable of analyzing all the data on the Web – the content, links, and transactions between people and computers. A ‘Semantic Web’, which should make this possible, has yet to emerge, but when it does, the day-to-day mechanisms of trade, bureaucracy and our daily lives will be handled by machines talking to machines. The ‘intelligent agents’ people have touted for ages will finally materialize.”

Another advocate of the semantic web was Aaron Swartz, co-founder of reddit, political organizer and hacktivist who was instrumental in the fight against SOPA. In his unfinished A Programmable Web he wrote:

“The Semantic Web is based on a bet, a bet that giving the world tools to easily collaborate and communicate will lead to possibilities so wonderful we can scarcely even imagine them right now.”

History of the Knowledge Graph: a solution to Google’s scale problem

The first-ever website on the Internet, info.cern.ch, was published by Tim Berners-Lee on August 6, 1991. Over the next two decades hundreds of trillions of webpages got stored on over 8M servers. Even though Google indexes only a small percentage of them, Google’s ranking models quickly faced a scale problem. By 2005, a Browseable Fact Repository emerged as Google’s single-point-of-truth database solution. Google started to move from indexing URLs to indexing data. Knowledge graphs quickly emerged as the most popular semantic metadata organizational model for both commercial and research applications. Since then, the distinction between semantic networks and knowledge graphs has blurred.

In 2006, Google patented a Browseable Fact Repository, an early version of Google’s Knowledge Graph. It was built by the Google Annotation Framework team headed by Andrew Hogue. The idea was to start indexing data instead of URLs and match them against entities listed in a single-point-of-truth database.

At the same time Danny Hillis and John Giannandrea founded Metaweb Technologies which developed Freebase, an online collection of structured data harvested from multiple sources, including user-submitted wiki contributions.

Metaweb was acquired by Google, in 2010, and its technology became the basis of the Google Knowledge Graph. John Giannandrea was hired by Google. He was appointed as Head of Search in 2016. In 2018 he moved to Apple and was appointed as Senior VP of ML and AI strategy. He is currently in charge of Siri and probably of the upcoming Apple Search Engine.

One of the challenges in the 2000s was to enable different applications to work easily with data from different silos. Text search, with its wide coverage well beyond narrow verticals, emerged as the ideal candidate for a common ontology that both humans and machines can understand.

In 2011, major search engines Bing, Google, and Yahoo (later joined by Yandex) presented webmasters with Schema.org, a standardized semantic vocabulary of tags (or microdata) used to mark up web content and make it machine-readable.

In December 2012, Ray Kurzweil was personally hired by Google co-founder Larry Page as Director of Engineering to “work on new projects involving machine learning and language processing”. He contributed to the Hummingbird algorithm that placed greater emphasis on NLP and entities annotated by the Knowledge Graph.

The Knowledge Graph is a database that provides descriptions of real-world entities and their interrelations: in other words, answers, not just links. It leveraged DBpedia and Freebase as data sources and later incorporated content annotated with Schema.org. Google won’t say what percentage of queries evoke a Knowledge Graph answer but seems comfortable with a ballpark estimate of about 25%.

Today, major companies, such as Facebook, Airbnb, Amazon and Uber have created their own “knowledge graphs” that power semantic searches and enable smarter processing and delivery of data.

In July 2020, the Wikimedia Foundation announced Abstract Wikipedia, based on a 22-page paper by Denny Vrandečić, founder of Wikidata. It would allow contributors to create content using abstract notation which could then be translated to different natural languages, balancing out content more evenly, no matter the language you speak.

The Semantic Web is slowly growing, even though the benefits of developing for it are not always immediate or visible. We all stand to gain from incorporating semantic markup into our web pages, as every site that does so strengthens the foundations of an open, transparent, decentralized internet.

Semantic SEO emerged as a practice shortly after the publication of the 2015 patent: ‘Ranking search results based on entity metrics‘ which described how, in some instances, search results are based on entities found inside of Google’s Knowledge Graph. The meaning and relationships of entities on web pages are communicated to knowledge graphs via search engines, with a machine-native meta-data vocabulary called schema.org. The simplest and most popular encoding methodology used for schema.org is JSON-LD (RDF in its developer-friendly form).

Datasets and Knowledge Bases

Traditionally we distinguish between three main types of data:

Unstructured data (i.e. the web) is what most of you think of as the web. It can be found in vast quantities and in a variety of forms: webpages, spreadsheets, emails, blogs, tweets, medical records, etc. Without making any assumptions about the format, all these may be treated as textual documents, i.e., a sequence of words.

Semi-structured data (i.e. Wikipedia) is characterized by the lack of rigid, formal structure. Typically, it contains tags or other types of markup to separate textual content from semantic elements. Semi-structured data is “self-describing,” i.e., “the schema is contained within the data and is evolving together with the content”.

The value of links extends beyond navigational purposes; they capture semantic relationships between articles. In addition, anchor texts are a rich source of entity name variants.

Structured data (i.e. knowledge graphs): adheres to a predefined (fixed) schema and is typically organized in a tabular format—think of relational databases. The schema serves as a blueprint of how the data is organized, describes how real-world entities are modeled, and imposes constraints to ensure the consistency of the data.

Entities are represented in knowledge repositories and knowledge bases. Krisztian Balog defines them as:

A knowledge repository (KR) is a catalog of entities that contains entity type information, and (optionally) descriptions or properties of entities, in a semi-structured or structured format.

The knowledge repository contains, at the bare minimum, an entity catalog: a dictionary of entity names and unique identifiers. Typically, the knowledge repository also contains the descriptions and properties of entities in semi-structured (e.g., Wikipedia) or structured format (e.g., Wikidata, DBpedia). Commonly, the knowledge repository also contains ontological resources (e.g., a type taxonomy).

A knowledge base (KB) is a structured knowledge repository that contains a set of facts (assertions) about entities.

Knowledge bases are rich in structure, but light on text; they are of high quality, but are also inherently incomplete. This stands in stark contrast with the Web, which is a virtually infinite source of heterogeneous, noisy, and text-heavy content that comes with limited structure.

Graphs have a long history, starting with Euler’s solution of the Königsberg bridge problem in 1736. The current popularity of graphs was clearly influenced by the introduction of Google’s Knowledge Graph in 2012, which acted as a semantic enhancement of Google’s search function: it does not match strings, but enables searching for entities. Since then, the term knowledge graph has become an umbrella term for a family of graph-based knowledge representation applications that contain semantically described content entities: DBpedia, YAGO, Wikidata, Yahoo’s Spark, Google’s Knowledge Vault, Microsoft’s Satori, Facebook’s entity graph, etc.

Knowledge graphs have emerged as a core abstraction for incorporating human knowledge into intelligent systems and have become the training ground for deep learning models. They offer a flexible way to capture, organize, and query a large amount of multi-relational data and enable semantic linking of entities.

DBpedia is a “database version of Wikipedia.” More precisely, DBpedia is a knowledge base that is derived by extracting structured data from Wikipedia. Over the years, DBpedia has developed into an interlinking hub in the Web of Data.

YAGO (Yet Another Great Ontology) is an open source knowledge base developed at the Max Planck Institute for Computer Science in Saarbrücken. It is automatically extracted from Wikipedia and other sources. As of 2019, YAGO3 has knowledge of more than 10 million entities and contains more than 120 million facts about these entities. The information in YAGO is extracted from Wikipedia (e.g., categories, redirects, infoboxes), WordNet (e.g., synsets, hyponymy), and GeoNames.[5] The accuracy of YAGO was manually evaluated to be above 95% on a sample of facts. To integrate it to the linked data cloud, YAGO has been linked to the DBpedia ontology[7] and to the SUMO ontology. YAGO has been used in the Watson artificial intelligence system.

Wikidata is a free collaborative knowledge base operated by the Wikimedia Foundation. Its goal is to provide the same information as Wikipedia, but in a structured format.

Search Types

Traditionally, there are three types of search queries: keyword, structured, and natural language queries.

Keyword queries have become the standard of information access. Keyword queries are easy to formulate, but—by their very nature—are imprecise.

Structured queries: Structured data sources (databases and knowledge bases) are traditionally queried using formal query languages (such as SQL or SPARQL). These queries are very precise. However, formulating them requires a “knowledge of the underlying schema as well as that of the query language”. Structured queries are primarily intended for expert users and well-defined, precise information needs.

Natural language queries: Information needs can be formulated using natural language, the same way as one human would express them to another in an everyday conversation. Often, natural language queries take a question form. Such queries are also increasingly spoken aloud with voice search, instead of being typed.

Entity-oriented search

Entity-oriented search is a range of information access tasks where “entities” (or “things”) are used as information objects, instead of “words” (or “strings”). The enabling components of rich results are large-scale structured knowledge repositories (also known as knowledge graphs), which organize information around entities, rather than URLs, as the primary record structure in an information retrieval system.

What is an entity?

An entity is a uniquely identifiable object or thing, characterized by its name(s), type(s), attributes, and relationships to other entities.

Entities are used as:

Unit of Retrieval: According to various studies, 40–70% of queries in web search mention or target specific entities [20, 23, 32]. These queries commonly seek a particular entity, albeit often an ambiguous one (e.g., “harry potter”) or a list of entities (e.g., “doctors in barcelona”).

Knowledge Representation: Entities help to bridge the gap between the worlds of unstructured and structured data: they can be used to semantically enrich unstructured text, while textual sources may be utilized to populate structured knowledge bases.

For an Enhanced User Experience: When presenting retrieval results, knowledge about entities may be used to complement the traditional document-oriented search results (i.e., the “ten blue links”) with various information boxes and knowledge panels (like it is shown in Fig. 1.1). Finally, entities may be harnessed for providing contextual recommendations.

Entity properties:

Unique identifier: Entities need to be uniquely identifiable and there must be a one-to-one correspondence between each entity identifier (ID) and the (real-world or fictional) object it represents. Examples of entity identifiers include email addresses for people (within an organization), Wikipedia page IDs (within Wikipedia), and uniform resource identifiers (URIs, within Linked Data repositories).

Name(s): Entities are known and referred to by their name, usually a proper noun (e.g., “Tesla Motors, Inc”, “Barack Obama”).

Type(s): Types can be thought of as containers or semantic categories that group together entities with similar properties. Schema.org has over 840 types. An analogy can be made to object-oriented programming, whereby an entity of a type is like an instance of a class.

Attributes: Attributes are the characteristics or features of an entity (e.g., the attributes of a populated place include latitude, longitude, population, postal code, country, continent).

Notice that some of the items in these lists are entities themselves (e.g., locations or persons). We do not treat those as attributes but consider them separately, as relationships.

Attributes always have literal values; optionally, they may also be accompanied by data type information (such as number, date, geographic coordinate, etc.).

Relationships: Relationships describe how two entities are associated to each other. From a linguistic perspective, entities may be thought of as proper nouns and relationships between them as verbs. For example, “Homer wrote the Odyssey” or “The General Theory of Relativity was discovered by Albert Einstein.” Relationships may also be seen as “typed links” between entities.
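The entity properties listed above can be sketched as a small data structure. This is only an illustration in Python: the class name, field names, and example.com URIs are assumptions made for the example, not part of any knowledge graph's actual API.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    """An entity with the properties described above (illustrative model only)."""
    uri: str                       # unique identifier
    names: list[str]               # one or more names
    types: list[str]               # semantic categories, e.g. schema.org types
    attributes: dict[str, object] = field(default_factory=dict)          # literal values only
    relationships: list[tuple[str, str]] = field(default_factory=list)   # (predicate, target URI)

    def triples(self) -> list[tuple[str, str, object]]:
        """Flatten the entity into (subject, predicate, object) facts."""
        facts: list[tuple[str, str, object]] = [(self.uri, "type", t) for t in self.types]
        facts += [(self.uri, "name", n) for n in self.names]
        facts += [(self.uri, key, value) for key, value in self.attributes.items()]
        facts += [(self.uri, predicate, target) for predicate, target in self.relationships]
        return facts


# Hypothetical URIs; "Homer wrote the Odyssey" becomes a typed link between two entities.
homer = Entity(uri="https://example.com/entity/homer", names=["Homer"], types=["Person"])
odyssey = Entity(
    uri="https://example.com/entity/odyssey",
    names=["Odyssey", "The Odyssey"],
    types=["Book"],
    attributes={"inLanguage": "Ancient Greek"},   # attribute: a literal value
    relationships=[("author", homer.uri)],        # relationship: points at another entity
)

for fact in odyssey.triples():
    print(fact)
```

Note how the attribute holds a literal while the relationship holds another entity's identifier, which is the distinction the text draws between the two.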

Entity score

According to Google’s 2015 Patent: Ranking Search Results Based On Entity Metrics, the entity score is based on four metrics:

- relatedness

- notability

- contribution

- prize

Synthetic Queries and Augmentation Queries

In March 2018, Google was granted a patent titled “Query augmentation”, which involves giving quality scores to queries.

Word2Vec

Entity Ranking

Entity Linking

Populating Knowledge Bases

Understanding Information Needs

The Anatomy of an Entity Card

Synthetic Queries and Augmentation Queries

How to analyse text with Google’s Natural Language API

Google offers a demo of its Natural Language API that you can use for free. Scroll down the page: it is an API, but you can cut and paste some text straight into the demo box on the screen to get an idea of how it works.

How to use Google Trends for Entity SEO research

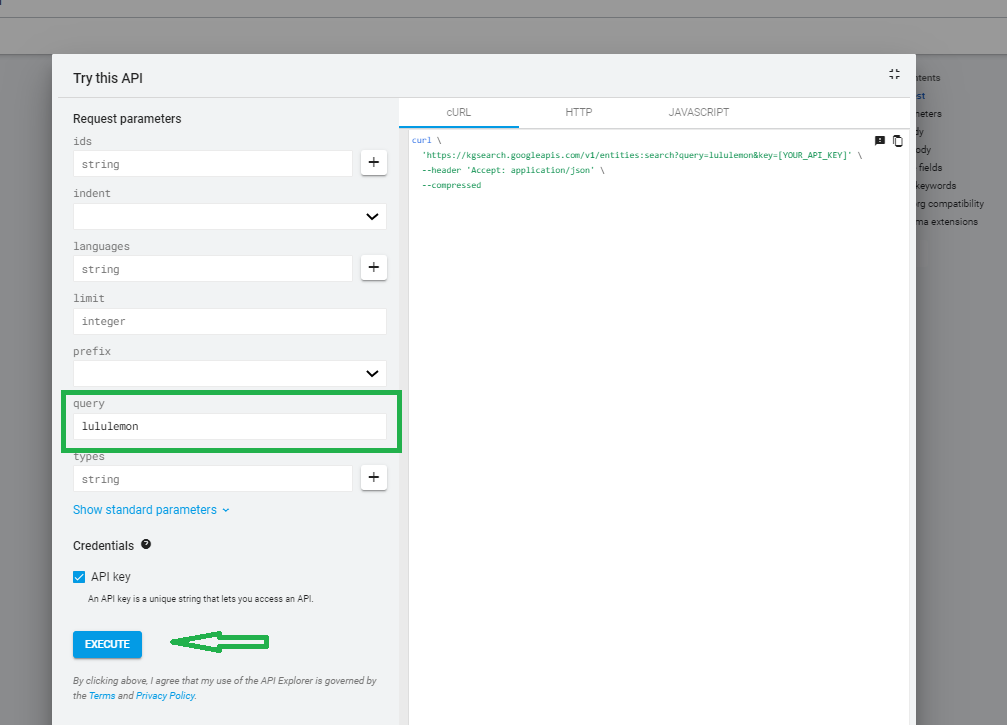

Search for entities with the Knowledge Graph Search API

Google created the Knowledge Graph Search API, which allows us to query Google’s Knowledge Graph database.

Access the page:

Add your search term in the “Query” field.

Then click execute.

Result for “Lululemon” (Dec 2020)

Notice the:

“@id”: “kg:/m/…”

This is the canonical URI for the entity. This is the knowledge graph code that you should aim to optimise if you have one.

“kg” means that the data comes from Google’s “Knowledge Graph”.

“m” usually seems to mean the data was sourced from Google’s purchase of Freebase and was expected to be migrated over to Wikidata, but it is not clear whether this migration was ever completed. If this was a “g” instead, the data is sourced from Google’s own proprietary dataset.

If you don’t have one and zero entities exist for your query, you might want to consider creating one for your brand.
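The same lookup can be scripted instead of using the web form. The sketch below builds the request URL for the Knowledge Graph Search API and reads the @id values out of a response. It makes no network call: the sample response is hypothetical (its shape follows the API's ItemList format, but the identifier and name are invented), and you would need your own API key to run a real query.

```python
import json
from urllib.parse import urlencode

ENDPOINT = "https://kgsearch.googleapis.com/v1/entities:search"


def build_search_url(query: str, api_key: str, limit: int = 1) -> str:
    """Return the request URL for a Knowledge Graph Search API lookup."""
    params = urlencode({"query": query, "key": api_key, "limit": limit})
    return f"{ENDPOINT}?{params}"


def describe_id(entity_id: str) -> str:
    """Explain the prefix of an @id, following the reading given in the text."""
    if entity_id.startswith("kg:/m/"):
        return "Freebase-derived identifier"
    if entity_id.startswith("kg:/g/"):
        return "Google-proprietary identifier"
    return "unknown identifier format"


def entity_ids(response: dict) -> list[str]:
    """Collect the @id of every entity in a search response."""
    return [item["result"]["@id"] for item in response.get("itemListElement", [])]


# Hypothetical, trimmed response: invented values, for illustration only.
SAMPLE_RESPONSE = json.loads("""
{
  "@type": "ItemList",
  "itemListElement": [
    {
      "@type": "EntitySearchResult",
      "result": {"@id": "kg:/m/0example", "name": "Example Brand", "@type": ["Organization"]},
      "resultScore": 100.0
    }
  ]
}
""")

print(build_search_url("Lululemon", "YOUR_API_KEY"))
for entity_id in entity_ids(SAMPLE_RESPONSE):
    print(entity_id, "->", describe_id(entity_id))
```

An empty itemListElement array corresponds to the "zero entities exist for your query" case described above.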

Entity Strategy for Brands

This sequence was created by Jason Barnard, who popularized the idea of the Brand SERP. He strongly recommends building your knowledge panel from your ‘About Us’ page instead of the ‘Home’ page, because it is a web page that is about one thing only: the brand. Google tends to prefer one page representing one entity because that is simpler to understand.

- Give your entity a home

- Provide information about the entity

- Use schema markup to be explicit

- Find and create corroboration on third-party sites

- Create signposts

- Go to step 2 for each piece of new information

Your first strategic decision is whether you want your brand to become a fully defined entity in Google’s Knowledge Graph. Once you get listed, that entity will then be continuously updated in the knowledge graph. If you are a clothing store, for example, then marketing your new T-shirt organically becomes much easier. The knowledge base will simply update, showing the new T-shirt. This immediately creates a short vector between the T-shirt and your brand, and the relationship is defined.

Competitor analysis

Look up your competitors and check the following:

- Which pages are marked up: home pages, product pages, product/offer/service category pages, etc.

- What types and properties are used.

- Which SERP features your competitors are getting.

- Whether they are listed in the Knowledge Graph.

Why JSON-LD?

Microdata and RDFa can also be used to output structured data on a website; however, we will focus on Google’s favorite format: JSON-LD (JavaScript Object Notation for Linked Data).

JSON-LD is a lightweight method of encoding linked data using JSON in JavaScript and other Web-based programming environments.

I recommend using JSON-LD syntax for the following reasons:

- JSON-LD is considered to be simpler to implement, due to the ability to simply paste the markup within the HTML document.

- It is both machine-readable and human-readable.

- Search engines like Google are creating enhancements and some rich results are only achievable with mark-up implemented by JSON-LD. This means that as search engines roll out newer, fancier search features, your Schema markup will be ready for these in the future.

- JSON-LD is also much easier to scale and work with than other formats.

The syntax of JSON

Let’s have a quick look at the basic syntax. JSON syntax is a subset of JavaScript syntax; it includes the following:

- Curly braces hold objects

- Square brackets hold arrays

- Data is represented in name/value pairs.

- Name-value pairs have a colon between them as in “name” : “value”.

- Names are on the left side of the colon. They need to be wrapped in double quotation marks, as in “name” and can be any valid string.

- JSON values are found to the right of the colon. There are 6 simple data types:

- string

- number

- object (a collection of name-value pairs)

- array

- boolean (true or false)

- null (an empty value)

Each name-value pair is separated by a comma. JSON looks like this:
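As an illustration, here is a small JSON document that uses all six value types, wrapped in a few lines of Python that parse it to confirm the syntax is valid. The property names and values are invented for the example (only the business name is borrowed from later in this guide).

```python
import json

# A small JSON document using every value type; names and values are illustrative.
SAMPLE_JSON = """
{
  "name": "Rush Media Agency",
  "foundingYear": 2015,
  "isOpen": true,
  "fax": null,
  "address": {"city": "Example City", "country": "US"},
  "services": ["SEO", "Content", "Analytics"]
}
"""

data = json.loads(SAMPLE_JSON)  # raises json.JSONDecodeError on a syntax error

# Show which Python type each JSON value was parsed into.
for name, value in data.items():
    print(f"{name}: {type(value).__name__}")
```

Parsing a snippet this way is a quick check for the most common mistakes, such as a missing closing brace or a stray comma after the last pair.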

Curly braces

All the structured data will live inside two curly braces. Keep them clean and indented if there is more than one pair.

Square brackets

“[” and “]” are used for arrays. JSON arrays are ordered collections of values (strings, numbers, objects, and so on).

Commas

“,” are used to separate name-value pairs. A comma also sets the expectation that another name-value pair is coming, so the last pair in an object must not be followed by one.

Quotation marks

Every schema type, property name, and text value you fill in will be wrapped in double quotation marks.

Colon

“:” is used to separate a name from a value. “name” : “value”

How to write JSON-LD code

You don’t need to learn how to write JSON-LD. You can use generators to produce the code and then enrich it. If that is the case, jump to Part 1. If you would like to learn how to write JSON-LD code, follow the steps below. Without getting into all the complexities, I will list the cases most commonly encountered by, and most useful to, SEOs.

You will have to memorize or copy/paste three immutable tags.

1. Call the script:

The HTML script element is used to embed executable code or data. It is typically used to embed or refer to JavaScript code, but in our case it will be used to embed data with JSON-LD.

Setting the type attribute to application/ld+json lets the web browser know that the script contains JSON-LD data rather than JavaScript to execute. After the opening script tag, use curly brackets { } to wrap an object and its properties. If you forget to close an open bracket, your JSON-LD won’t be parsed.

Basic JSON-LD code looks like this:
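A minimal sketch of that basic structure: the Python helper below (the function name is my own) serializes a JSON-LD object and wraps it in the script element with the application/ld+json type, printing the exact block you would paste into a page. The business name is a placeholder.

```python
import json


def to_script_tag(data: dict) -> str:
    """Wrap a JSON-LD object in the script element that goes into the page's HTML."""
    body = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a text value can never close the script element early.
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


markup = {
    "@context": "https://schema.org",
    "@type": "LocalBusiness",
    "name": "Rush Media Agency",
}

print(to_script_tag(markup))
```

Generating the block from a data structure, rather than typing it by hand, guarantees that every bracket is closed and every string is quoted.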

2. Reference schema.org vocabulary

The second tag you have to memorize is:

“@context”: “https://schema.org”

@context defines the vocabulary that the data is being linked to. In this case it lets whatever parses the markup (a search engine crawler, for example) know that you are referencing schema.org.

3. Specify the item type

The final element you need to memorize is the @type specification. Everything after is data annotation. @type specifies the item type being marked up. You can find a comprehensive list of all item types here.

In this case the item type is “LocalBusiness”

4. Define Properties

Items have “Properties” and “Values”. E.g., for “name”: “Rush Media Agency”, “name” is a Property and “Rush Media Agency” is a Value.

Once we reference a Type, any Property that can apply to that Type can be called and defined. In this case the Properties are “name”, “image”, “logo”, “url”, and “description”.

Use square brackets [ ] for situations where there are multiple values for an item property. A common use is to leverage the “sameAs” item property for listing multiple social media platforms.
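Here is a sketch of a LocalBusiness item with the properties named above and a “sameAs” array. All URLs and the description are placeholders; the Python wrapper only serializes the object so the resulting JSON-LD can be inspected.

```python
import json

local_business = {
    "@context": "https://schema.org",
    "@type": "LocalBusiness",
    "name": "Rush Media Agency",
    "image": "https://example.com/images/office.jpg",
    "logo": "https://example.com/images/logo.png",
    "url": "https://example.com",
    "description": "A placeholder description of the business.",
    # A Python list becomes a JSON array in square brackets: several values for one property.
    "sameAs": [
        "https://www.facebook.com/example",
        "https://www.linkedin.com/company/example",
    ],
}

print(json.dumps(local_business, indent=2))
```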

5. Nested item properties

Nested item properties are item types within item types. Nested item properties start off like normal item properties, however after the colon you can start by declaring a new item type and therefore all item properties now belong to the new item type. Open a new set of brackets and define the properties of your new entity. After closing the bracket, you will be back to defining the properties of the parent entity.

Here’s an example of nested structured data, where Recipe is the main item, and aggregateRating and video are nested in the Recipe.
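A sketch of what such nested markup might look like, built in Python and printed as JSON-LD. The recipe name, rating figures, dates, and URLs are invented placeholders.

```python
import json

recipe = {
    "@context": "https://schema.org",
    "@type": "Recipe",
    "name": "Example Banana Bread",
    # Nested item: a new @type after the colon starts a child entity.
    "aggregateRating": {
        "@type": "AggregateRating",
        "ratingValue": 4.7,
        "ratingCount": 123,
    },
    # A second nested item; once its braces close we are back in the Recipe.
    "video": {
        "@type": "VideoObject",
        "name": "How to make banana bread",
        "description": "A placeholder description of the video.",
        "thumbnailUrl": "https://example.com/video/thumbnail.jpg",
        "contentUrl": "https://example.com/video/banana-bread.mp4",
        "uploadDate": "2020-12-01",
    },
    "recipeYield": "1 loaf",
}

print(json.dumps(recipe, indent=2))
```

The "recipeYield" property after the video block belongs to the Recipe again, which shows how closing a nested object returns you to the parent entity.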

6. Create an array

Create an array with “[” and “]”. Arrays are ordered collections of values, such as strings or objects.

7. Create a node array using @graph & @id

Open with “@graph” and an open square bracket, which starts an array. Then you can insert a series of nodes.

You should identify your nodes with @id. The @id allows you to give a node a Uniform Resource Identifier (URI). This URI identifies the node. Properties such as “isPartOf” can then point to another node’s “@id”, which defines, establishes, and connects items within the array.

“isPartOf”:{“@id”:“https://example.com/#website”}
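To make the idea concrete, the sketch below builds a three-node @graph (Organization, WebSite, WebPage) connected through @id references, plus a small helper that reports references pointing at no node. The URLs, the fragment naming scheme, and the helper function are assumptions made for this example.

```python
import json

SITE = "https://example.com"

graph = {
    "@context": "https://schema.org",
    "@graph": [
        {
            "@type": "Organization",
            "@id": f"{SITE}/#organization",
            "name": "Example Co",
            "url": SITE,
        },
        {
            "@type": "WebSite",
            "@id": f"{SITE}/#website",
            "url": SITE,
            "publisher": {"@id": f"{SITE}/#organization"},  # reference, not a copy
        },
        {
            "@type": "WebPage",
            "@id": f"{SITE}/about/#webpage",
            "url": f"{SITE}/about/",
            "isPartOf": {"@id": f"{SITE}/#website"},
        },
    ],
}


def dangling_references(doc: dict) -> set[str]:
    """Return every {"@id": ...} reference that points at no node in the graph."""
    defined = {node["@id"] for node in doc["@graph"]}
    referenced = {
        value["@id"]
        for node in doc["@graph"]
        for value in node.values()
        if isinstance(value, dict) and set(value) == {"@id"}
    }
    return referenced - defined


print(json.dumps(graph, indent=2))
print("Dangling references:", dangling_references(graph))
```

Because each node is defined once and referenced by URI elsewhere, the same Organization can be reused across many pages without repeating its properties.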

Part 1: Choose a “type” and its “properties”

There are over 840 schema “types” (classes or entities). Here are the most popular “types”: Article, Event, JobPosting, LocalBusiness, Organization, Person, Product, Recipe. “Types” are defined and connected with “properties”. For example, a schedule can be added to an Event type with the eventSchedule property, and ticketing information can be added via the offers property. It is worth pointing out that “types” are written in PascalCase and “properties” are written in camelCase.

Properties are either required or recommended.

- Required properties for LocalBusiness are: @id (URL), name of business, image (logo) and address

- Recommended properties for LocalBusiness are: geo (latitude, longitude), telephone, openingHours, etc.
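A quick way to keep track of these two lists is a small audit function. The sketch below hard-codes the required and recommended properties exactly as listed above (they are this guide's lists, not an official validator), and the sample markup uses placeholder values.

```python
REQUIRED = ("@id", "name", "image", "address")
RECOMMENDED = ("geo", "telephone", "openingHours")


def audit(markup: dict) -> dict[str, list[str]]:
    """List which required and recommended properties are missing from the markup."""
    return {
        "missing_required": [p for p in REQUIRED if p not in markup],
        "missing_recommended": [p for p in RECOMMENDED if p not in markup],
    }


local_business = {
    "@context": "https://schema.org",
    "@type": "LocalBusiness",
    "@id": "https://example.com/#localbusiness",
    "name": "Rush Media Agency",
    "image": "https://example.com/images/logo.png",
    "address": {
        "@type": "PostalAddress",
        "streetAddress": "1 Example Street",
        "addressLocality": "Example City",
        "postalCode": "00000",
        "addressCountry": "US",
    },
    "telephone": "+1-555-0100",
}

report = audit(local_business)
print(report)
```

For the sample above, nothing required is missing, while geo and openingHours are flagged as recommended additions.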

Start with main pages: Home Page, Product page, About Page, Our Team, Contact Us and other pages that are ranking well and are generating the most traffic. Keep in mind that 90% of rich results are triggered by the top five ranking positions.

Organization and LocalBusiness

The Organization schema type represents an organization such as a corporation, a school, an NGO, etc.

LocalBusiness represents a particular physical business or branch of an organization. Examples include a restaurant, a branch of a restaurant chain, a branch of a bank, a hair salon, etc. Instead of using the generic “LocalBusiness” schema, you can use this spreadsheet to determine which schema type describes your business. The core mark-up features are: LocalBusiness, PostalAddress and AggregateRating.

FAQPage, HowTo and Speakable

Once you are done with main pages, consider creating a FAQ Page or a How-to Page or have a product page that contains an FAQ about the product. Here is how to go about it:

- Find out what is a simple question in your market space.

- Come up with a straightforward answer that is summarized in a short paragraph or with a bullet point list.

- Add a relevant image.

- Implement the FAQPage or HowTo and a Speakable schema (for Speakable schema, use 2-3 sentences maximum as the text shouldn’t take more than 30 seconds to read).

This will make you eligible for a collapsible menu under your SERP listing and for voice search results. Speakable schema markup allows you to tell digital assistants what can be used as a voice answer on the page.

In order to find questions you can use a tool called Answer the Public. When you enter a keyword, it will return a number of questions and suggestions.
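The FAQPage and Speakable markup described above could be generated along these lines. This is a sketch: the helper function, the sample question, and the .faq-answer CSS class are assumptions for the example, and your page would need elements matching whatever selector you choose.

```python
import json


def faq_page(questions: list[tuple[str, str]], speakable_selectors: list[str]) -> dict:
    """Build FAQPage markup with a speakable section from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in questions
        ],
        # Points voice assistants at the page elements that hold the short answers.
        "speakable": {
            "@type": "SpeakableSpecification",
            "cssSelector": speakable_selectors,
        },
    }


faq = faq_page(
    [
        (
            "What is schema markup?",
            "Schema markup is structured data that describes a page to search engines.",
        )
    ],
    speakable_selectors=[".faq-answer"],  # hypothetical CSS class on the page
)

print(json.dumps(faq, indent=2))
```

Keeping the questions in a plain list makes it easy to add the ones you find with a question research tool and regenerate the markup.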

Rating, AggregateRating & Review

AggregateRating represents the average rating based on multiple ratings or reviews.

Review is a review of an item e.g. product or movie.

Rating is used for an individual rating given for an item.

This example forms the aggregate rating code:
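A minimal sketch of an aggregate rating, assuming a hypothetical product with 89 reviews on a 1 to 5 scale (the product name and all numbers are placeholders):

```json
{
  "@context": "https://schema.org",
  "@type": "Product",
  "name": "Example Widget",
  "aggregateRating": {
    "@type": "AggregateRating",
    "ratingValue": 4.4,
    "reviewCount": 89,
    "bestRating": 5,
    "worstRating": 1
  }
}
```

The AggregateRating is nested inside the item it describes through the aggregateRating property, so the rating is always tied to a specific entity.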

Article

There are multiple types of article schema that can be added, NewsArticle and BlogPosting being the most common. A NewsArticle is an article whose content reports news, or provides background context and supporting materials for understanding the news. If the content of the webpage is a blog post, use the more specific BlogPosting type.

BlogPosting

Use BlogPosting for an individual post. Recommended properties are: about, articleBody, offers (if the post relates to an offering), video (if the post has a video in it), and timeRequired (how long it takes to read the post). The timeRequired value uses the ISO 8601 duration format: for example, 1 hour 10 minutes would be PT1H10M.
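Here is a minimal BlogPosting sketch using some of these properties. The headline, author, date, and body text are placeholders. The timeRequired value is an ISO 8601 duration, where the "T" separates the date part from the time part, so a seven-minute read is written PT7M.

```json
{
  "@context": "https://schema.org",
  "@type": "BlogPosting",
  "headline": "How to Write Your First JSON-LD Snippet",
  "author": {
    "@type": "Person",
    "name": "Jane Doe"
  },
  "datePublished": "2020-01-15",
  "about": {
    "@type": "Thing",
    "name": "JSON-LD"
  },
  "articleBody": "The full text of the blog post goes here.",
  "timeRequired": "PT7M"
}
```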

Service Schema

A service provided by an organization, e.g. delivery service, print services, etc.

SpeakableSpecification

A SpeakableSpecification indicates (typically via xpath or cssSelector) sections of a document that are highlighted as particularly speakable. Instances of this type are expected to be used primarily as values of the speakable property.
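As a minimal sketch, a WebPage can point to its speakable sections with CSS selectors. The page name, URL, and the class names .faq-question and .faq-answer are hypothetical and would need to match the actual HTML of your page.

```json
{
  "@context": "https://schema.org",
  "@type": "WebPage",
  "name": "What Is Schema Markup?",
  "url": "https://www.example.com/what-is-schema-markup",
  "speakable": {
    "@type": "SpeakableSpecification",
    "cssSelector": [".faq-question", ".faq-answer"]
  }
}
```

The selectors should target short passages, in line with the 2-3 sentence guidance given earlier for Speakable content.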

CollectionPage

The “CollectionPage” is useful when there is a collection of items without a specific hierarchy. The Collection type is defined to be for collections of “Creative Works or other artefacts”. Generally “CollectionPage” contains content about the collection in addition to the collection items. WebPage conveys “Here is a web page”, while ItemPage conveys “Here is a web page about a single thing”, and CollectionPage conveys “Here is a web page about a group of things”.

Here is “CollectionPage” for a training page for a software app with: review, name, author, offer, article, FAQ page, and description.

Person

A person schema can be used for a real or fictional person. It’s often used to define an author of an article or to describe a person’s role within an organization.
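A minimal Person sketch describing someone's role within an organization. The name, job title, company, and profile URLs are invented placeholders.

```json
{
  "@context": "https://schema.org",
  "@type": "Person",
  "name": "Jane Doe",
  "jobTitle": "Head of Content",
  "worksFor": {
    "@type": "Organization",
    "name": "Example Company"
  },
  "url": "https://www.example.com/team/jane-doe",
  "sameAs": [
    "https://www.linkedin.com/in/janedoe-example",
    "https://twitter.com/janedoe_example"
  ]
}
```

The same node can be reused as the author value of an Article or BlogPosting.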

VideoObject

The video schema markup provides more information about the content of a page with an embedded video. If you do not implement it on your website, your video pages will be less visible than those of sites that use video snippets.
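A minimal VideoObject sketch for a page with an embedded video. The title, description, URLs, date, and duration are placeholders.

```json
{
  "@context": "https://schema.org",
  "@type": "VideoObject",
  "name": "How to Add Schema Markup to a Web Page",
  "description": "A short walkthrough of generating JSON-LD and adding it to a page.",
  "thumbnailUrl": "https://www.example.com/images/video-thumbnail.jpg",
  "uploadDate": "2020-01-15",
  "duration": "PT2M30S",
  "embedUrl": "https://www.example.com/embed/schema-walkthrough"
}
```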

SearchAction

According to Google’s documentation for the Sitelinks Searchbox, you need to start by creating a WebSite data item for your homepage. Then you can use the potentialAction property to create a SearchAction data item:
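A minimal sketch of this pattern, assuming a hypothetical site whose internal search results live at /search?q=. The domain and the search URL are placeholders to be replaced with your own.

```json
{
  "@context": "https://schema.org",
  "@type": "WebSite",
  "url": "https://www.example.com/",
  "potentialAction": {
    "@type": "SearchAction",
    "target": {
      "@type": "EntryPoint",
      "urlTemplate": "https://www.example.com/search?q={search_term_string}"
    },
    "query-input": "required name=search_term_string"
  }
}
```

The name in query-input must match the placeholder in curly braces inside urlTemplate.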

Breadcrumb Schema

A breadcrumb trail on a page indicates the page’s position in the site hierarchy. It is a type of secondary navigation scheme that reveals the user’s location in a website. The term comes from the Hansel and Gretel fairy tale, in which the two title children drop breadcrumbs to form a trail back to their home. You can usually find breadcrumbs on eCommerce sites or any other large website that has a large amount of content organized in a hierarchical manner. You also see them in web applications that have more than one step. You will have to add breadcrumb schema to every page you would like to have included.

You shouldn’t use breadcrumbs for single-level websites that have no logical hierarchy or grouping. Breadcrumb navigation should be regarded as an extra feature and shouldn’t replace effective primary navigation menus.
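A minimal BreadcrumbList sketch for a three-level trail (Home > Shoes > Running Shoes). The names and URLs are placeholders for a hypothetical store.

```json
{
  "@context": "https://schema.org",
  "@type": "BreadcrumbList",
  "itemListElement": [
    {
      "@type": "ListItem",
      "position": 1,
      "name": "Home",
      "item": "https://www.example.com/"
    },
    {
      "@type": "ListItem",
      "position": 2,
      "name": "Shoes",
      "item": "https://www.example.com/shoes"
    },
    {
      "@type": "ListItem",
      "position": 3,
      "name": "Running Shoes",
      "item": "https://www.example.com/shoes/running"
    }
  ]
}
```

The position values start at 1 and follow the order of the trail from the top of the hierarchy down to the current page.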

Schema for eCommerce Category Pages

Google doesn’t allow product markup at the category level. ItemList with product markup seems to be the most recommended approach for product-listing pages.

Use ItemList to identify a list of items. This includes products on your category or subcategory page. Within ItemList you will also use ListItem which identifies each item in the list.
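Here is a minimal sketch of an ItemList for a category page, where each ListItem points to the URL of a product detail page. The product URLs are placeholders; the full Product markup would then live on each of those product pages.

```json
{
  "@context": "https://schema.org",
  "@type": "ItemList",
  "itemListElement": [
    {
      "@type": "ListItem",
      "position": 1,
      "url": "https://www.example.com/shoes/running/model-a"
    },
    {
      "@type": "ListItem",
      "position": 2,
      "url": "https://www.example.com/shoes/running/model-b"
    },
    {
      "@type": "ListItem",
      "position": 3,
      "url": "https://www.example.com/shoes/running/model-c"
    }
  ]
}
```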

eCommerce marketplace with multiple sellers for a single product

Suppose you want to apply Product structured data on a marketplace website where five sellers are selling the same product. Each seller has a different price and a different rating. Each seller’s aggregate rating comes from that seller’s buyers (the rating is for the product).

You can include: product details, price, rating, and availability.
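One possible way to model this, as a sketch rather than a definitive pattern, is a single Product with an AggregateOffer that wraps one Offer per seller. Only two sellers are shown for brevity, and the product, seller names, prices, and rating figures are placeholders. The rating is shown at the product level only; how to represent per-seller ratings is left open here.

```json
{
  "@context": "https://schema.org",
  "@type": "Product",
  "name": "Example Widget",
  "description": "A widget sold by several marketplace sellers.",
  "aggregateRating": {
    "@type": "AggregateRating",
    "ratingValue": 4.3,
    "reviewCount": 120
  },
  "offers": {
    "@type": "AggregateOffer",
    "priceCurrency": "USD",
    "lowPrice": 19.99,
    "highPrice": 24.5,
    "offerCount": 2,
    "offers": [
      {
        "@type": "Offer",
        "price": 19.99,
        "priceCurrency": "USD",
        "availability": "https://schema.org/InStock",
        "seller": {
          "@type": "Organization",
          "name": "Seller One"
        }
      },
      {
        "@type": "Offer",
        "price": 24.5,
        "priceCurrency": "USD",
        "availability": "https://schema.org/OutOfStock",
        "seller": {
          "@type": "Organization",
          "name": "Seller Two"
        }
      }
    ]
  }
}
```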

AboutPage

An About page is a page where your readers/visitors learn more about you and what you do. It often tells the story of the site owner’s journey from the start to finally achieving success.

ContactPage

A contact page should include both an email address and a contact form for visitors to fill out. You can also include a business address, phone number, or specific employee/department contact information.

JobPosting

Your job posting should make both the offered role and your organization stand out by appealing to the interests and preferences of the specific people you want to attract.
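A minimal JobPosting sketch. The job title, description, dates, employer, and location are placeholders for a hypothetical opening.

```json
{
  "@context": "https://schema.org",
  "@type": "JobPosting",
  "title": "SEO Specialist",
  "description": "We are looking for an SEO specialist to plan and deploy structured data across our websites.",
  "datePosted": "2020-01-15",
  "validThrough": "2020-03-15",
  "employmentType": "FULL_TIME",
  "hiringOrganization": {
    "@type": "Organization",
    "name": "Example Company",
    "sameAs": "https://www.example.com"
  },
  "jobLocation": {
    "@type": "Place",
    "address": {
      "@type": "PostalAddress",
      "addressLocality": "London",
      "addressCountry": "GB"
    }
  }
}
```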

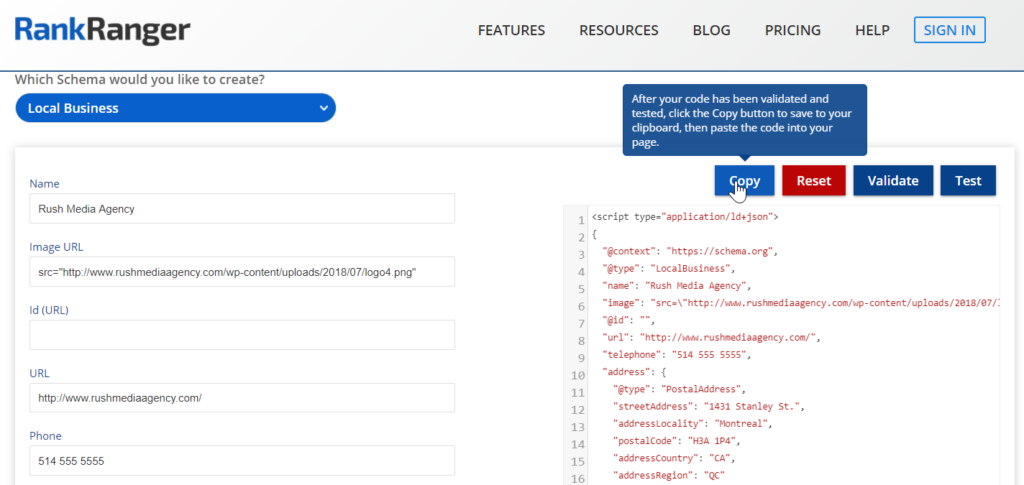

Simple method: Generate the JSON-LD code

Choose the generator that best fits the schema you would like to implement. Some of it comes down to personal preference, and some generators have options that others don’t. Keep in mind that you can always easily modify and customize the code once it’s generated.

Schema Markup Generators

- Schema Markup Generator by Merkle.

- Google’s Structured Data Highlighter allows you to visually tag elements of your web page so that Google can generate the appropriate schema markup.

- RankRanger Schema Markup Generator

- Steal Our JSON-LD Generator

- Microdata generator

- JSON-LD Generator by Hall Analysis.

Part 3. Customize and test your code

Once you have generated the code, paste it into Google’s Structured Data Testing Tool and check for warnings or errors.

You can use the testing tool to customize your schema. You can add additional properties and make your schema extremely rich. For example, for the LocalBusiness schema you can add properties such as:

- “sameAs” to add social media listings

- “hasMap” to connect maps to the business

- “areaServed” to add a Wikidata entity for the region

- “geo” for geocoordinates

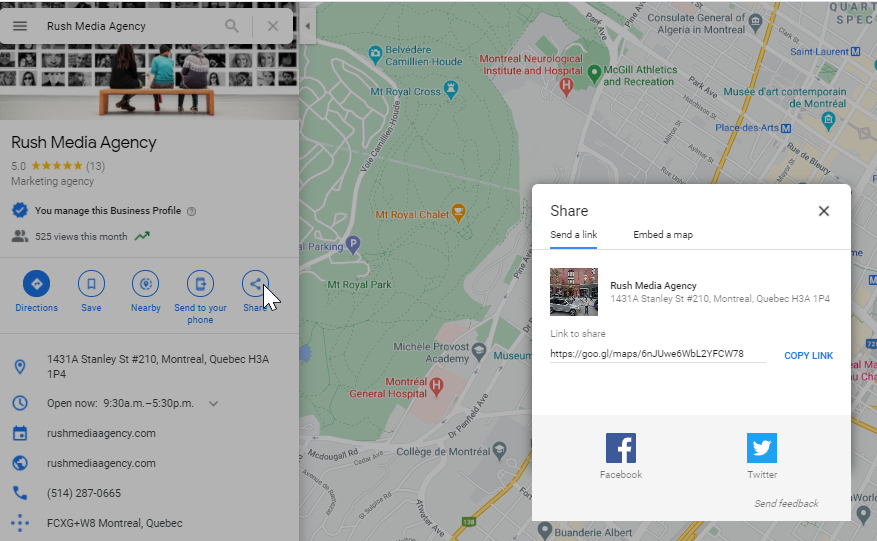

Here is a sample of an enriched LocalBusiness schema for a Rush Media Agency home page:

To get the map link for “hasMap”, locate your business listing in Google Maps. Open the menu at the top left, next to the search bar, and select share or embed.

You can use this geographic tool to get the latitude and longitude coordinates from an address. Type the full address, including the street name, the city or town, and the state, to get more accurate latitude and longitude values.

For “areaServed” use a Wikidata link. Wikidata is a collaboratively edited knowledge graph hosted by the Wikimedia Foundation. It acts as a “central storage for the structured data of its Wikimedia sister projects including Wikipedia, Wikivoyage, Wikisource, and others”. Wikidata, Wikipedia, the CIA Factbook and Google’s Knowledge Graph are the main structured data sources that allow Google to provide logical answers.
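The four enrichment properties discussed above can be sketched as follows. This is an illustrative example for a hypothetical business, not the Rush Media Agency markup: the business name, social profile URLs, and map link are placeholders. The Wikidata URL points to the item for London (Q84), used here only as an example region.

```json
{
  "@context": "https://schema.org",
  "@type": "LocalBusiness",
  "@id": "https://www.example.com/#localbusiness",
  "name": "Example Bakery",
  "url": "https://www.example.com/",
  "sameAs": [
    "https://www.facebook.com/examplebakery",
    "https://www.instagram.com/examplebakery"
  ],
  "hasMap": "https://www.google.com/maps?cid=1234567890",
  "areaServed": {
    "@type": "City",
    "name": "London",
    "sameAs": "https://www.wikidata.org/wiki/Q84"
  },
  "geo": {
    "@type": "GeoCoordinates",
    "latitude": 51.5074,
    "longitude": -0.1278
  }
}
```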

Besides using Wikidata to define your entities with properties such as about, mentions, and subjectOf, you can also leverage Wikidata to gain a Google Knowledge Graph result:

- Create a Wikipedia profile.

- Create a profile on Wikidata.org.

- Implement Organization schema markup on your website and specify your Wikidata and Wikipedia page links using the sameAs property.
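The last step above can be sketched as an Organization node whose sameAs array lists the Wikidata and Wikipedia pages. The organization name and both URLs are placeholders; the Wikidata identifier Q0000000 is not a real item and stands in for your own.

```json
{
  "@context": "https://schema.org",
  "@type": "Organization",
  "@id": "https://www.example.com/#organization",
  "name": "Example Company",
  "url": "https://www.example.com/",
  "logo": "https://www.example.com/images/logo.png",
  "sameAs": [
    "https://www.wikidata.org/wiki/Q0000000",
    "https://en.wikipedia.org/wiki/Example_Company"
  ]
}
```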

As an optional step, you can use Product Ontology values in your code with “additionalType” like so:
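A minimal sketch, assuming a hypothetical bakery. Product Ontology identifiers are built from the title of the matching English Wikipedia article, so the "Bakery" article gives the URI below. The business name is a placeholder.

```json
{
  "@context": "https://schema.org",
  "@type": "LocalBusiness",
  "additionalType": "http://www.productontology.org/id/Bakery",
  "name": "Example Bakery"
}
```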

You can also add “Aggregate Rating”:

You can also include “review” items alongside “aggregateRating” as nested entities. Simply copy and paste the review item and adjust the aggregate “reviewCount” and “ratingValue”.
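Here is a minimal sketch of reviews nested next to an aggregate rating. The business, reviewers, dates, and review text are placeholders. With two reviews rated 5 and 4, the aggregate reviewCount is 2 and the ratingValue is their average, 4.5; both need to be updated whenever a review item is added.

```json
{
  "@context": "https://schema.org",
  "@type": "LocalBusiness",
  "name": "Example Bakery",
  "aggregateRating": {
    "@type": "AggregateRating",
    "ratingValue": 4.5,
    "reviewCount": 2
  },
  "review": [
    {
      "@type": "Review",
      "author": {
        "@type": "Person",
        "name": "First Customer"
      },
      "datePublished": "2020-01-15",
      "reviewBody": "Great bread and friendly staff.",
      "reviewRating": {
        "@type": "Rating",
        "ratingValue": 5
      }
    },
    {
      "@type": "Review",
      "author": {
        "@type": "Person",
        "name": "Second Customer"
      },
      "datePublished": "2020-02-03",
      "reviewBody": "Good pastries, but the queue was long.",
      "reviewRating": {
        "@type": "Rating",
        "ratingValue": 4
      }
    }
  ]
}
```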

Part 4. Deploy the code on your web page

Schema JSON-LD markup can be implemented by:

- Manually adding the JSON-LD Schema markup: adding it manually doesn’t scale, but if you don’t have too many pages, it shouldn’t cause a problem. Google’s Data highlighter will be useful here.

- Your Content Management System (CMS): If you’re adding schema to many pages, it makes sense to have functionality for that in your CMS, re-using your existing fields for schema purposes. My favorite plugin for WordPress is Markup (JSON-LD) structured in schema.org.

- Tag Manager (not recommended): SEO experts like implementing schema markup through Tag Manager because it doesn’t require going through development. I don’t recommend it because it relies on JavaScript being executed, and Google has limited resources available for this. In practice, this means that it will take a lot longer for search engines to pick up and show your schema implementation than if you implement it directly in HTML. On top of that, several search engines – Yahoo, Yandex, and Baidu – don’t execute JavaScript at all. Bing appears to be slowly starting to execute JavaScript, but it is still far from where Google is right now in terms of scale and abilities.

When implementing structured data, follow Google’s guidelines so that your pages are eligible for enhanced snippets and you avoid a possible Google penalty.

Part 5. Monitor and measure the business impact

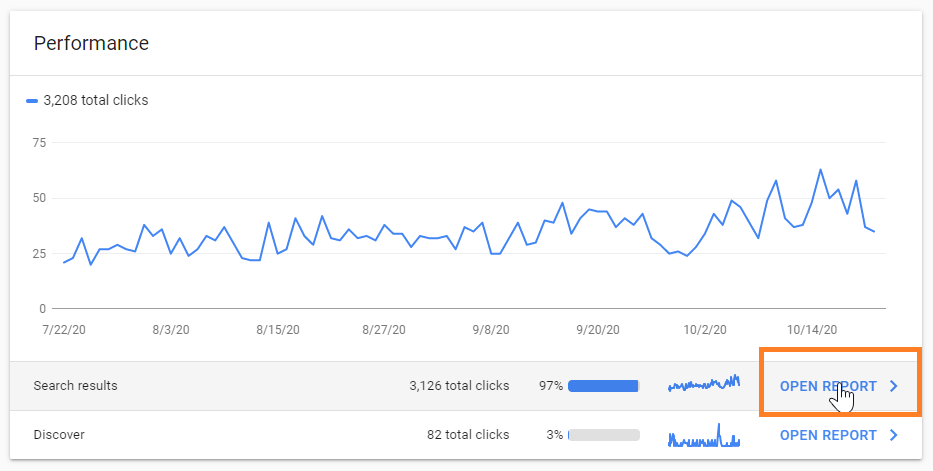

Once you have implemented schema markup, you can evaluate its impact by looking at individual pages in Google Search Console, which provides:

- Total clicks

- Total impressions

- Average CTR

- Average position

When you log in to Search Console, click on “Open Report”.

You can choose a specific page:

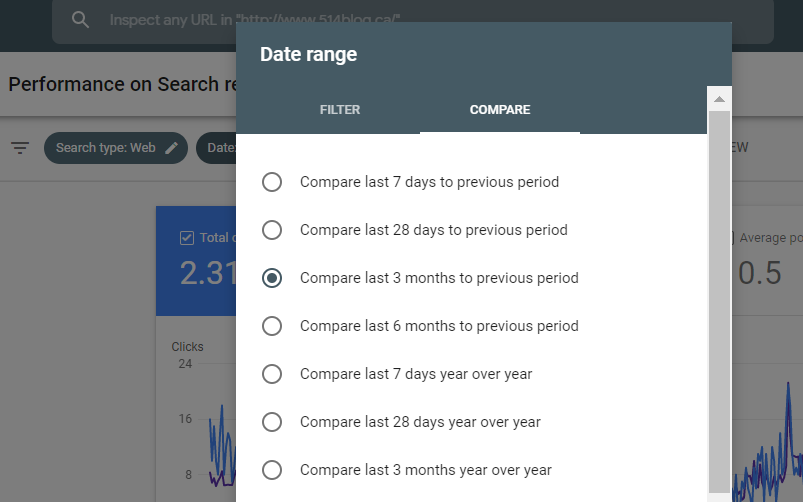

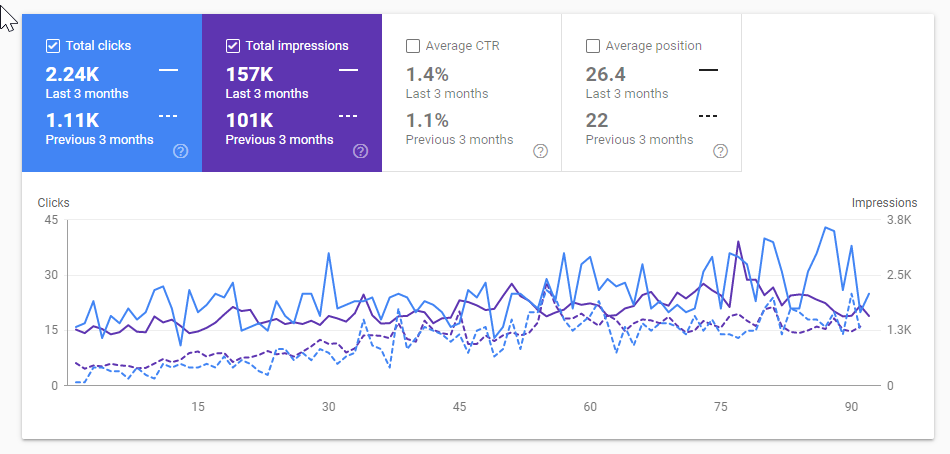

You can add a date filter and compare periods:

Compare the click-through rates of pages with schema to the click-through rate of the page before schema, or with the site as a whole or with pages without schema.

You can also compare pages with rich results to those with no rich results.

Pages with rich results tend to have a higher CTR. You can also calculate the percentage of impressions or clicks that your rich result segment achieves compared to pages without rich results or to the sitewide performance.

Case studies:

- Case studies published by Schema App

- Case study about Rakuten’s home chef recipe

- Inlink experiment about “About” & “Mentions” types

Google Patents:

- Browseable fact repository – Filed: 2006; Granted: 2010

- Query augmentation – Filed: 2015; Granted: 2018

Tools:

- Structured Data Testing Tool – Google

- JSON-LD Playground – json-ld.org

- Schema.org & Microdata Generator – microDATAgenerator

- Markup Validation Tool – Bing

- Flesch-Kincaid readability test tool

- “LocalBusiness” schema types spreadsheet

Resources:

- Semantic Capital: Its Nature, Value, and Curation by Luciano Floridi

- A Brief History of Knowledge Graph’s Main Ideas: A tutorial

- Towards a Definition of Knowledge Graphs

- JSON for linking data: JSON-LD

- Schema Markup Training by Schema App

- Local Business Structured Data / Schema by Tim Capper

- Podcast: The Entity Hour with Bill & Terry

- Voice Search: A No-Nonsense Guide

- Entity-Oriented Search by Krisztian Balog

- Structured Data Markup Helper – Search Console Help

- JSON Basics Tutorial

- RDF at w3schools.com

- W3C’s RDF validator

- The Semantic Web Made Easy by W3C

- Whatever Happened to the Semantic Web? by Two-Bit History

- Programmable Web by Aaron Swartz

- The Internet’s Own Boy: The Story of Aaron Swartz (Must Watch Documentary 2014)

- General structured data guidelines

Plug-ins:

WordPress

- Markup (JSON-LD) – Kazuya Takami

- Schema Creator – Raven

- WordPress SEO by Yoast – Joost de Valk

- WordLift

Magento

- Schema.org Extension – Iuvo Commerce

- MSemantic: Semantic SEO for Rich Snippets in Google & Yahoo – semantium

Joomla

- J4Schema – Davide Tampellini

Drupal

- Schema.org – Drupal